Nvidia halted its self-driving tests after the Uber crash, and the news sent shockwaves through the autonomous vehicle industry, raising serious questions about the safety and reliability of self-driving technology. The incident, involving an Uber self-driving car that used some Nvidia technology, highlights the complex interplay of human error, technological limitations, and the urgent need for robust safety protocols in this rapidly evolving field. The crash, which killed a pedestrian and damaged the vehicle, has prompted a thorough review of Nvidia’s testing procedures and a reevaluation of the ethical and legal implications of autonomous vehicles.

The specifics of the Uber crash, including the roles played by Nvidia’s technology, Uber’s safety protocols, and the human operator, are crucial in understanding the full extent of the situation. Nvidia’s subsequent decision to halt testing underscores the gravity of the event and the company’s commitment to addressing the underlying issues. This pause in testing, however temporary, will undoubtedly impact the timeline for the release of future autonomous vehicle technologies and the overall development of the industry.

The Uber Crash Incident

The March 2018 Uber self-driving car accident in Tempe, Arizona, remains a stark reminder of the challenges and risks associated with autonomous vehicle technology. This incident, involving a Volvo XC90 equipped with Uber’s self-driving system and incorporating some Nvidia technology, tragically highlighted the complexities of transitioning from human-driven to autonomous transportation. While Nvidia’s role was indirect, the subsequent halt of their self-driving tests underscores the gravity of the situation and the industry’s ongoing struggle for safety.

Circumstances of the Uber Self-Driving Crash

The accident occurred at night on a clear, relatively well-lit road. Elaine Herzberg, a pedestrian pushing a bicycle, stepped into the path of the Uber self-driving vehicle. The vehicle, operating in autonomous mode with a safety driver behind the wheel, failed to adequately detect and react to Ms. Herzberg’s presence, resulting in a fatal collision. The speed of the vehicle at impact was a significant factor in the severity of the accident. The safety driver, Rafaela Vasquez, was reportedly looking down at her phone moments before the collision. This inattention, compounded by the autonomous system’s failure, led to the tragic outcome.

Injuries and Damages

The accident resulted in the death of Elaine Herzberg. The Uber vehicle sustained significant front-end damage. Beyond the immediate physical damage, the incident caused widespread public concern about the safety and reliability of self-driving technology, impacting public perception and regulatory scrutiny of the autonomous vehicle industry. The legal and financial ramifications for Uber were substantial, including lawsuits and investigations.

Timeline of Events

The timeline surrounding the Uber crash begins with the vehicle’s deployment in autonomous mode. Leading up to the crash, the vehicle’s sensors and software were processing data from its environment. The crucial moment involved the failure of the system to adequately identify and react to the pedestrian. The impact occurred immediately afterward. In the immediate aftermath, emergency services were contacted, and an investigation was launched. Uber temporarily suspended its self-driving program following the accident. Subsequent investigations and legal proceedings unfolded over an extended period.

Roles in the Uber Self-Driving Crash

| Factor | Nvidia’s Technology | Uber’s Safety Protocols | Human Operator (Safety Driver) |
|---|---|---|---|
| Role in the Incident | Provided some underlying computing infrastructure, the exact extent of which remains unclear and was not directly implicated in the failure to stop. | Safety protocols failed to prevent the accident, highlighting deficiencies in the system’s ability to handle unexpected situations and the lack of sufficient human oversight. | The safety driver’s inattention contributed significantly to the accident, as reports suggest she was looking down at her phone moments before the impact. |
| Responsibility | Indirect; provided technology components but not directly responsible for the operational decisions of the autonomous system. | Direct; responsible for the design, testing, and operation of the self-driving system and the overall safety protocols. | Direct; responsible for monitoring the autonomous system and intervening if necessary. |
| Outcome | Halted self-driving tests following the incident, indicating a reassessment of safety protocols and technology integration. | Significant reputational damage, legal repercussions, and a reevaluation of safety protocols and operational procedures. | Involved in the investigation, faced legal scrutiny, and potentially disciplinary action due to inattention. |

Nvidia’s Involvement

Source: carbuzzimages.com

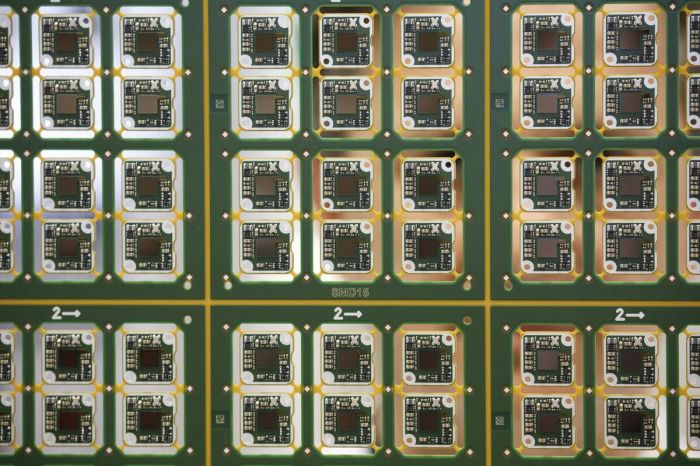

The Uber self-driving car crash tragically highlighted the complexities of autonomous vehicle technology, and Nvidia’s role in the system’s development and deployment warrants close examination. While not solely responsible for the vehicle’s operation, Nvidia provided crucial hardware and software components that played a significant part in the car’s perception and decision-making capabilities.

Nvidia’s contribution to the Uber self-driving system most likely involved its Drive PX platform, a computing platform designed for autonomous vehicles, although the exact extent of that contribution remains unclear. At the time of the accident, the platform likely incorporated several key technologies, including high-performance GPUs for processing sensor data, deep learning algorithms for object detection and classification, and software frameworks for managing the overall system. In a vehicle built around it, the Drive PX platform acts as the “brain” of the self-driving car, processing information from sensors such as cameras, lidar, and radar to build a real-time 3D representation of the environment and make driving decisions.

Nvidia Drive PX Platform Capabilities

The Nvidia Drive PX platform, a key component in Uber’s autonomous vehicle system, offered several functionalities crucial for safe and efficient self-driving. Its high-performance GPUs enabled the processing of massive amounts of sensor data in real-time, allowing the vehicle to perceive its surroundings with a high degree of accuracy. This included the ability to detect and classify objects such as pedestrians, vehicles, and traffic signals, crucial for navigation and collision avoidance. The platform also supported sophisticated deep learning algorithms that continuously learned and improved the system’s performance over time. Furthermore, the Drive PX provided a robust software framework for integrating various sensor inputs, managing the vehicle’s control systems, and ensuring reliable operation.

Nvidia’s Testing and Validation Procedures

While specific details of Uber’s internal testing procedures using Nvidia’s technology remain largely undisclosed, Nvidia itself maintains rigorous testing and validation processes for its autonomous vehicle platforms. These typically involve extensive simulations, testing in controlled environments, and real-world testing on closed tracks or public roads under strict supervision. The goal is to identify and mitigate potential issues and ensure the reliability and safety of the technology. However, the exact extent to which these processes were implemented and the level of oversight by Uber remain important unanswered questions following the accident.

Nvidia’s Response to the Crash

Following the Uber crash, Nvidia released a statement expressing its condolences to the victim’s family and stating its commitment to safety in the development of autonomous vehicle technology. The company emphasized its rigorous testing and validation processes and its ongoing work to improve the safety and reliability of its Drive PX platform. Beyond this public statement, Nvidia’s direct involvement in the investigation and any subsequent changes to its development processes or partnerships remain largely confidential, reflecting the sensitive nature of the ongoing legal and technical inquiries.

Impact on Autonomous Vehicle Development

The Uber crash, involving a self-driving car and tragically resulting in a fatality, sent shockwaves through the autonomous vehicle (AV) industry. While AV technology is still in its nascent stages, this incident highlighted the critical need for robust safety measures and rigorous testing protocols, potentially reshaping the trajectory of the industry’s development in both the short and long term. The immediate aftermath saw several companies temporarily halting or re-evaluating their testing programs, a testament to the gravity of the situation and the inherent risks involved.

The crash’s impact extends beyond immediate reactions. It forced a critical examination of the current state of AV technology, prompting a deeper dive into the complexities of sensor fusion, decision-making algorithms, and fail-safe mechanisms. This introspection is likely to accelerate the development of more sophisticated safety features and potentially lead to a more cautious and measured approach to deployment.

Short-Term Consequences

The short-term consequences are largely focused on a slowdown in testing and deployment. Companies are likely to prioritize enhanced safety protocols and more conservative testing strategies. Increased scrutiny from regulators and the public will lead to more stringent internal reviews and potentially delayed timelines for bringing autonomous vehicles to market. Funding for AV startups might also be affected, with investors potentially demanding greater assurances of safety before committing further capital. For example, several companies experienced temporary stock price dips following the incident, reflecting investor concerns.

Long-Term Consequences

In the long term, the crash will likely catalyze significant advancements in AV safety technology. Expect to see increased investment in redundancy systems, improved sensor technologies (like LiDAR and radar), and more robust artificial intelligence algorithms capable of handling unexpected situations. The development of explainable AI (XAI), which allows for better understanding of an AV’s decision-making process, is also likely to receive a boost. This incident could accelerate the move towards a more collaborative approach, with increased data sharing between companies to improve overall safety standards. Think of it as a collective learning experience, forcing the industry to mature more rapidly.

Changes to Safety Regulations and Testing Procedures

The Uber crash will undoubtedly influence future safety regulations and testing procedures. Expect stricter guidelines on testing environments, the inclusion of more diverse scenarios in simulations, and a greater emphasis on fail-safe mechanisms. Regulators might demand more rigorous verification and validation processes before granting permits for autonomous vehicle testing and deployment. The incident could also lead to the development of standardized safety benchmarks and performance metrics for AVs, promoting greater transparency and accountability within the industry. For instance, we might see mandatory requirements for specific sensor configurations or minimum levels of redundancy in critical systems.

Comparison to Other Notable Self-Driving Car Accidents

While the Uber crash stands out due to its fatal outcome, it shares similarities with other notable self-driving car accidents. Many incidents involved failures in perception (misinterpreting objects or environmental conditions) or decision-making (making incorrect maneuvers). However, the Uber crash differed in that it involved a well-funded company testing in a seemingly controlled environment, highlighting the potential for failures even under seemingly ideal circumstances. Other accidents often involved less advanced technology or testing in more challenging environments. The common thread, however, is the need for continuous improvement in sensor technology, algorithmic robustness, and fail-safe mechanisms.

Hypothetical Scenario Preventing the Crash

Imagine the Uber vehicle was equipped with a more sophisticated sensor fusion system that combined data from LiDAR, radar, and cameras with greater accuracy and redundancy. If this system had correctly identified the pedestrian earlier, the vehicle’s decision-making algorithm, enhanced with advanced object recognition and prediction capabilities, could have initiated a timely braking maneuver or swerved to avoid the collision. Further, a robust fail-safe system could have overridden the primary control system in the event of a critical error, preventing the accident. This scenario underscores the importance of investing in multiple layers of safety, combining advanced sensor technology with sophisticated algorithms and reliable fail-safe mechanisms.
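The layered approach described above can be sketched in a few lines of Python. This is an illustrative sketch only, not Uber’s or Nvidia’s actual logic: the `Detection` type, the noisy-OR fusion rule, the independence assumption between sensors, and the threshold values are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One sensor's report of a possible obstacle (hypothetical structure)."""
    sensor: str        # label only, e.g. "lidar", "radar", "camera"
    confidence: float  # estimated probability in [0, 1] that an obstacle is present
    distance_m: float  # estimated distance to the obstacle, in metres


def fused_confidence(detections: List[Detection]) -> float:
    """Noisy-OR fusion: probability that at least one sensor is right,
    assuming (for illustration) that sensor errors are independent."""
    miss = 1.0
    for d in detections:
        miss *= 1.0 - d.confidence
    return 1.0 - miss


def should_brake(detections: List[Detection], speed_mps: float,
                 conf_threshold: float = 0.9,
                 ttc_threshold_s: float = 3.0) -> bool:
    """Brake when the fused confidence is high enough and the time to
    collision with the nearest reported obstacle is short enough.
    Both thresholds are made-up example values."""
    if not detections or speed_mps <= 0:
        return False
    if fused_confidence(detections) < conf_threshold:
        return False
    nearest_m = min(d.distance_m for d in detections)
    time_to_collision_s = nearest_m / speed_mps
    return time_to_collision_s <= ttc_threshold_s


readings = [
    Detection("lidar", 0.7, 40.0),
    Detection("radar", 0.6, 42.0),
    Detection("camera", 0.5, 41.0),
]
print(should_brake(readings, speed_mps=17.0))      # three sensors agree
print(should_brake(readings[2:], speed_mps=17.0))  # camera alone
```

In this toy setup, no single sensor is confident enough to trigger braking, but the three together are. That is the point of redundancy in the scenario above: weak evidence from several independent sources can justify an early response that any one source would miss.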

Ethical and Legal Ramifications

The Uber crash, involving a self-driving car partially developed with Nvidia technology, thrust the ethical and legal responsibilities surrounding autonomous vehicles into the spotlight. The incident highlighted the complex interplay between technological advancement, human oversight, and the potential for catastrophic consequences. Navigating this new landscape requires careful consideration of both ethical principles and the existing legal frameworks, which are currently struggling to keep pace with the rapid evolution of this technology.

The ethical considerations are multifaceted. Do we prioritize the safety of passengers in autonomous vehicles above all else, even if it means potentially endangering pedestrians or other drivers? How do we program autonomous vehicles to make difficult ethical decisions, such as choosing between two unavoidable harms? The very act of entrusting life-critical decisions to algorithms raises profound questions about accountability, transparency, and the potential for algorithmic bias. Furthermore, the distribution of responsibility in accidents involving autonomous vehicles is far from clear, raising significant legal challenges.

Legal Liabilities in Autonomous Vehicle Accidents

Determining liability in an autonomous vehicle accident is a complex legal puzzle. In the Uber crash, questions arose about the roles of Uber (the operator), Nvidia (the technology provider), and the human safety driver. Was it a software glitch, a hardware malfunction, human error, or a combination of factors? Current legal frameworks are ill-equipped to handle such scenarios, often relying on established precedents that don’t fully address the unique characteristics of autonomous driving systems. This necessitates a clear delineation of responsibilities amongst all involved parties, a process complicated by the intricate supply chains and software integrations inherent in modern autonomous vehicle development. The potential for massive legal battles and costly settlements looms large, impacting not only the companies directly involved but also the broader autonomous vehicle industry.

The Role of Human Oversight in Self-Driving Systems

The debate surrounding the necessity and extent of human oversight in self-driving systems is central to both ethical and legal considerations. While proponents of fully autonomous vehicles argue that human intervention can introduce errors and slow down progress, the Uber crash underscores the critical role human oversight can play in mitigating risks. A complete absence of human intervention raises concerns about accountability and the potential for unforeseen circumstances to overwhelm the capabilities of even the most sophisticated algorithms. The optimal level of human oversight remains a topic of intense debate, with various approaches being explored, from fully autonomous systems to those requiring constant human monitoring. The balance between technological advancement and safety remains a critical consideration.

Potential Improvements to the Legal Framework

The current legal framework governing autonomous vehicle testing and deployment is inadequate to address the unique challenges posed by this technology. Significant improvements are needed to ensure public safety and clarify liability.

- Establish clear guidelines for data collection and usage during autonomous vehicle testing, ensuring transparency and accountability.

- Develop a comprehensive legal framework for determining liability in accidents involving autonomous vehicles, considering the roles of all involved parties.

- Implement rigorous safety testing and certification standards for autonomous vehicle systems, addressing both hardware and software components.

- Create a regulatory body specifically tasked with overseeing the development and deployment of autonomous vehicles, ensuring consistent standards and effective enforcement.

- Develop a system for reporting and investigating accidents involving autonomous vehicles, enabling data-driven improvements in safety.

Nvidia’s Halt in Testing

The Uber crash, a stark reminder of the complexities and risks inherent in autonomous vehicle technology, sent shockwaves through the industry. For Nvidia, a key player in providing AI technology for self-driving cars, the incident triggered a significant response: a complete halt to its own self-driving tests. This wasn’t a knee-jerk reaction; it was a calculated move born from a need for thorough review and reassessment.

Nvidia’s decision to pause its autonomous vehicle testing stemmed from a multifaceted evaluation of the Uber accident’s implications for its own technology and safety protocols. The company likely recognized the potential for similar unforeseen circumstances to arise in its own testing program, highlighting vulnerabilities in its systems or a need for more robust safety measures. Furthermore, the intense public and regulatory scrutiny following the crash undoubtedly influenced Nvidia’s decision to proactively demonstrate a commitment to safety and transparency.

Scope and Duration of the Testing Halt

The exact scope and duration of Nvidia’s testing halt weren’t publicly disclosed in granular detail. However, it’s reasonable to assume the pause encompassed all active self-driving tests utilizing Nvidia’s Drive platform. This would include various testing scenarios, from controlled environments to real-world road tests. The duration likely lasted several weeks, allowing for a comprehensive internal review and potential software updates. The company prioritized a thorough investigation over maintaining an aggressive testing schedule. Uber took a comparable step, temporarily suspending its own self-driving program after the accident. Both pauses suggest that companies in this field are willing to put safety ahead of speed in the face of serious incidents.

Nvidia’s Internal Review Process

Following the halt, Nvidia initiated a rigorous internal review process. This likely involved multiple teams, including software engineers, safety experts, and legal counsel. The review focused on several key areas: a detailed analysis of the Uber crash report, a comprehensive assessment of Nvidia’s own safety protocols and algorithms, and an evaluation of the data collected from its own testing programs. The goal was to identify any potential weaknesses in Nvidia’s technology or operational procedures that might contribute to similar accidents. This meticulous examination aimed to prevent future incidents and enhance the safety and reliability of its autonomous driving platform. The process might have included simulations, code reviews, and potentially even hardware modifications to improve safety features.

Impact on Nvidia’s Timeline

The testing halt undoubtedly impacted Nvidia’s timeline for future autonomous vehicle technology releases. While the exact delay remains undisclosed, it’s likely to have caused a setback, pushing back deadlines for software updates, hardware integrations, and overall product launches. The internal review process, while crucial, consumed time and resources that could have been allocated to development. This delay, however, is a calculated cost of prioritizing safety and ensuring the reliability of its technology. Similar delays have been seen in other industries following safety-related incidents, demonstrating the precedence placed on responsible development practices. For example, the Boeing 737 MAX grounding resulted in significant delays and financial impacts, illustrating the consequences of prioritizing speed over safety.

Visual Representation of the Crash

The Uber self-driving vehicle, a Volvo XC90, struck a pedestrian crossing the street outside of marked crosswalks. The vehicle’s speed at impact contributed to the severity of the collision, which proved fatal. The scene was chaotic, with emergency responders quickly arriving to provide medical assistance and secure the area. The aftermath presented a stark contrast between the seemingly innocuous nature of the surrounding environment and the severity of the accident.

The physical damage to the Volvo was concentrated on the front end, where the impact occurred. The bumper was severely crushed, and the hood was dented and possibly raised. Shattered glass from the headlights and windshield likely littered the immediate area. The pedestrian, sadly, did not survive her injuries. The overall impression was one of sudden, unexpected violence juxtaposed against the ordinary backdrop of a city street.

Points of Potential System Failure

The accident highlights several potential points of failure within the autonomous driving system. First, the system’s perception capabilities may have been compromised. Perhaps the sensors, including lidar, radar, and cameras, failed to adequately detect the pedestrian in time, possibly due to limitations in their range, accuracy, or processing speed in challenging lighting or environmental conditions. This could have resulted in a delayed or absent warning to the backup driver.

Second, the system’s decision-making algorithms might have been flawed. Even if the pedestrian was detected, the system may have misjudged the pedestrian’s trajectory or speed, leading to an incorrect assessment of the risk and a failure to initiate appropriate braking or evasive maneuvers. This points to potential weaknesses in the software’s ability to handle complex, unpredictable scenarios involving moving objects. Third, the system’s response time could have been insufficient. Even if the system correctly identified the pedestrian and calculated the necessary response, the delay in executing the braking or steering commands might have been too long to prevent the collision. This underscores the importance of rapid and reliable system response in critical situations. Finally, the failure of the backup driver to intervene effectively represents a critical failure of the safety mechanism intended to prevent such accidents.
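The response-time point can be made concrete with basic kinematics: the distance needed to stop is the distance covered during the system’s latency plus the braking distance, d = v·t + v²/(2a). The sketch below is illustrative only. The function name and all numbers (20 m/s, 8 m/s² deceleration, the latency values) are assumptions picked for easy arithmetic, not figures from the crash investigation.

```python
def stopping_distance_m(speed_mps: float, latency_s: float,
                        decel_mps2: float) -> float:
    """Distance travelled before the vehicle comes to rest.

    speed_mps  : initial speed in metres per second
    latency_s  : delay between detection and full braking (system or human)
    decel_mps2 : constant braking deceleration in m/s^2 (must be positive)
    """
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    latency_distance = speed_mps * latency_s            # travelled before braking starts
    braking_distance = speed_mps ** 2 / (2 * decel_mps2)  # travelled while braking
    return latency_distance + braking_distance


# Hypothetical numbers: the same vehicle with a slow and a fast response.
print(stopping_distance_m(20.0, 1.0, 8.0))  # 45.0
print(stopping_distance_m(20.0, 0.5, 8.0))  # 35.0
```

With these example values, cutting the response delay from 1.0 s to 0.5 s shortens the stopping distance by 10 m, which is why latency in the detect, decide, and act chain matters as much as detection accuracy.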

Epilogue

The Uber crash, and Nvidia’s subsequent halt in self-driving tests, serves as a stark reminder of the challenges and risks inherent in the development of autonomous vehicle technology. While the pursuit of self-driving cars holds immense promise, ensuring safety and addressing ethical and legal concerns remains paramount. The industry must learn from this incident, implementing stricter safety protocols and fostering greater transparency to rebuild public trust and pave the way for a safer future of autonomous driving.