Facebook changing smart speaker privacy? Yeah, it’s a bigger deal than you think. We’re diving deep into how Facebook’s smart speaker data collection compares to giants like Amazon and Google, uncovering the sneaky ways they gather info and what you can actually *do* about it. Think you’re safe? Think again. This isn’t just about cookies anymore; it’s about your whole damn living room.

This article unpacks Facebook’s data collection practices, examining the types of information gathered, the methods used to (allegedly) anonymize it, and the potential security risks involved. We’ll walk you through adjusting your privacy settings, explore the ethical implications, and compare Facebook’s approach to that of competitors. Get ready to rethink your relationship with your smart speaker.

Facebook’s Smart Speaker Data Collection Practices


Facebook’s foray into the smart speaker market, while less dominant than Amazon’s Alexa or Google Assistant, raises important questions about data privacy. Understanding the type and extent of data collected by Facebook’s smart speakers is crucial for informed consumer choices. This analysis compares Facebook’s data practices with industry leaders to highlight key similarities and differences.

Types of Data Collected by Facebook’s Smart Speakers

Facebook’s smart speakers, like their other products, collect a range of user data to personalize experiences and improve services. This includes voice recordings (obviously!), which are analyzed for both content and context. Beyond the spoken words, metadata such as the time and location of each voice command is also recorded. Device usage data, including how often commands are issued and which types are used, adds to the profile built around each user. All of this is linked to the user’s Facebook account, enriching the profile with information Facebook already holds. This interconnectedness is a key differentiator from some competitors.

Comparison with Other Smart Speaker Manufacturers

Compared to Amazon and Google, Facebook’s data collection practices share some similarities but also exhibit key differences. All three companies collect voice recordings and metadata, and Amazon and Google have faced scrutiny for using this data extensively for targeted advertising and product development. Facebook also uses this data for advertising, but because it already holds detailed profiles of users from its social media platform, its data collection arguably depends less on smart speaker usage alone. The extent of data sharing across Facebook’s ecosystem is a significant point of contention, as it potentially allows for a more comprehensive user profile than either Amazon or Google can build independently.

Data Anonymization and Pseudonymization Methods

Facebook employs various techniques to anonymize or pseudonymize user data, aiming to protect individual identities. These methods involve removing personally identifiable information (PII) like names and addresses from the datasets. However, the effectiveness of these techniques is often debated, as the combination of metadata and other information can still allow for re-identification in certain circumstances. Facebook’s claim to anonymize data needs to be examined critically, considering the potential for re-identification through advanced data analysis. Transparency around their anonymization methods is vital for building user trust.
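To see why the re-identification worry is real, here’s a minimal Python sketch. It assumes a hypothetical log of voice-command metadata in which account IDs have been swapped for a keyed hash (HMAC-SHA256), a common pseudonymization technique. This is not Facebook’s actual pipeline: the key, field names, and records are all made up. Even with no names in the data, the combination of hour of day and city can single out one person.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key: whoever holds it can re-link pseudonyms to accounts.
SECRET_KEY = b"example-key-not-real"

def pseudonymize(account_id: str) -> str:
    """Replace an account ID with a truncated keyed hash; same input -> same pseudonym."""
    return hmac.new(SECRET_KEY, account_id.encode(), hashlib.sha256).hexdigest()[:16]

# Hypothetical voice-command log before pseudonymization.
raw_log = [
    {"account": "alice", "hour": 7, "city": "Springfield", "command": "weather"},
    {"account": "bob", "hour": 7, "city": "Springfield", "command": "news"},
    {"account": "carol", "hour": 23, "city": "Shelbyville", "command": "play jazz"},
]

# Drop the direct identifier, keep the metadata, attach the pseudonym.
pseudonymized = [
    {**{k: v for k, v in row.items() if k != "account"}, "user": pseudonymize(row["account"])}
    for row in raw_log
]

def unique_quasi_identifiers(rows, keys=("hour", "city")):
    """Return quasi-identifier combinations shared by exactly one record (k = 1)."""
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return [combo for combo, n in counts.items() if n == 1]

print(unique_quasi_identifiers(pseudonymized))  # [(23, 'Shelbyville')]
```

Only one record has the (23, Shelbyville) combination; in k-anonymity terms, k = 1. Anyone who knows who uses a speaker late at night in Shelbyville can link that pseudonym, and every other command logged under it, back to a real person.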

Comparison of Data Collection Practices

| Feature | Facebook | Amazon | Google |
|---|---|---|---|
| Voice Recordings | Collected and analyzed | Collected and analyzed | Collected and analyzed |
| Metadata (Time, Location) | Collected | Collected | Collected |
| Device Usage Data | Collected | Collected | Collected |
| Data Linking to User Accounts | Linked to Facebook account | Linked to Amazon account | Linked to Google account |
| Anonymization/Pseudonymization | Claimed, but effectiveness debated | Employs various techniques | Employs various techniques |

Privacy Settings and User Control

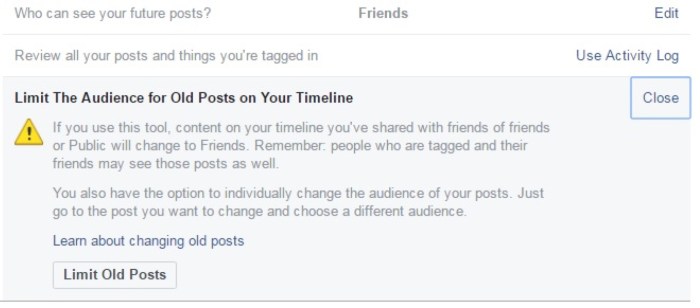

Navigating the privacy settings of Facebook’s smart speakers can feel like decoding a secret government document. But fear not, fellow internet explorer! We’re here to break down the process and give you the power to control your data. Understanding these settings is crucial for maintaining your digital privacy in an increasingly connected world. This isn’t about being paranoid; it’s about being informed and proactive.

Facebook’s smart speaker privacy settings aren’t exactly intuitive. They’re scattered across different menus and often buried within lengthy policy documents. However, once you understand the layout, managing your privacy becomes much easier. Remember, your data is valuable, and you have the right to decide how it’s used.

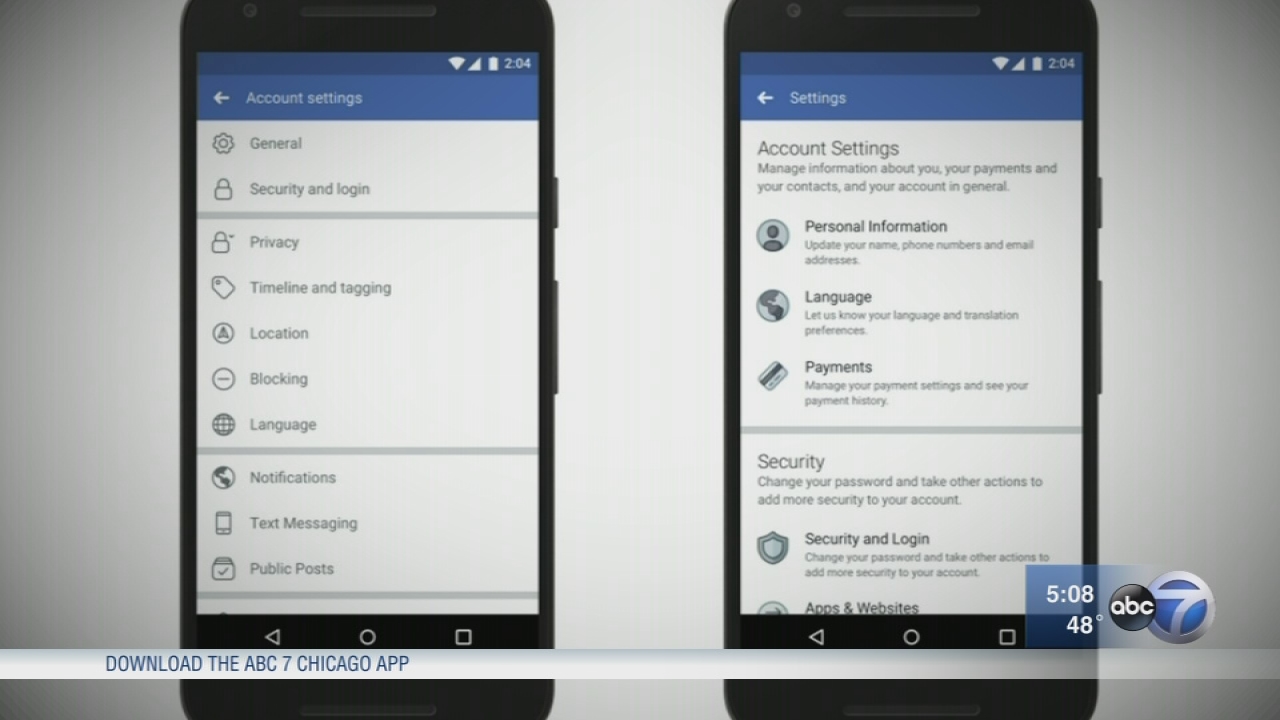

Adjusting Privacy Settings on Facebook’s Smart Speakers: A Step-by-Step Guide

Let’s assume you’re using a hypothetical Facebook smart speaker called “Portal Pal.” The exact steps may vary slightly depending on the specific device and software version, but the general principles remain consistent. First, you’ll typically access the settings through the Portal Pal’s companion app on your smartphone. Within the app, look for a section labeled “Privacy,” “Settings,” or something similar. Once inside, you’ll find options to manage voice recording history, location services, and data sharing with third-party apps. You can typically choose to disable voice recording entirely, limit location tracking to only when the device is actively used, and selectively allow or deny data access for specific apps. Each setting usually offers a clear explanation of its function. Remember to check for updates to the app, as new features and privacy options are regularly added.
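The settings described above can be thought of as a small configuration object. The sketch below is purely hypothetical. “Portal Pal” is the imaginary device from this walkthrough, and the field names, default values, location modes, and audit rules are assumptions for illustration, not any real Facebook app or API. It flags settings that collect more data than the features you actually use require.

```python
from dataclasses import dataclass, field
from enum import Enum

class LocationMode(Enum):
    """Hypothetical location options, mirroring the choices described above."""
    OFF = "off"
    WHILE_IN_USE = "while_in_use"
    ALWAYS = "always"

@dataclass
class PortalPalSettings:
    """Hypothetical privacy settings for the imaginary 'Portal Pal' speaker."""
    store_voice_recordings: bool = True           # assumed default: history kept
    location: LocationMode = LocationMode.ALWAYS  # assumed default: always on
    third_party_access: dict[str, bool] = field(default_factory=dict)

def audit(settings: PortalPalSettings, features_used: set[str]) -> list[str]:
    """Flag settings that collect more data than the features in use need (assumed rules)."""
    warnings = []
    if settings.store_voice_recordings:
        warnings.append("Voice recordings are stored: consider disabling or auto-deleting history.")
    if settings.location is LocationMode.ALWAYS:
        warnings.append("Location is always on: 'while in use' or 'off' collects less.")
    if "location_services" not in features_used and settings.location is not LocationMode.OFF:
        warnings.append("Location is enabled but no location-based feature is in use.")
    for app, allowed in settings.third_party_access.items():
        if allowed and app not in features_used:
            warnings.append(f"{app} has data access but is not in use.")
    return warnings

# Example: default settings and two linked apps, but only the music app is actually used.
settings = PortalPalSettings(third_party_access={"music_app": True, "trivia_game": True})
for warning in audit(settings, features_used={"music_app"}):
    print(warning)  # prints four warnings
```

A fully locked-down configuration (no stored recordings, location off, no unused app access) produces an empty list. That is the “disable what you don’t need” principle expressed as a check.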

Limiting Data Collection by Facebook’s Smart Speakers

One of the most effective ways to limit data collection is to disable features you don’t need. For example, if you’re not using the smart speaker’s location services, turn them off. This prevents the device from constantly tracking your whereabouts. Similarly, if you’re uncomfortable with voice recordings being stored, disable that feature. Many smart speakers offer the option to delete your voice history regularly or even automatically. By selectively disabling these features, you significantly reduce the amount of data collected by Facebook. Consider this: if you only use your smart speaker for playing music, you don’t need location services or voice assistants actively listening for commands.
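Automatic deletion usually boils down to a rolling retention window. Here’s a minimal sketch. It assumes each stored recording carries a timestamp and that you’ve picked a retention period; 90 days is used only for illustration, since real devices offer their own fixed options. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class Recording:
    recording_id: str
    captured_at: datetime

def apply_retention(recordings: list[Recording], keep_days: int,
                    now: datetime) -> tuple[list[Recording], list[Recording]]:
    """Split recordings into (kept, deleted) using a rolling retention window."""
    cutoff = now - timedelta(days=keep_days)
    kept = [r for r in recordings if r.captured_at >= cutoff]
    deleted = [r for r in recordings if r.captured_at < cutoff]
    return kept, deleted

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
history = [
    Recording("rec-1", now - timedelta(days=200)),
    Recording("rec-2", now - timedelta(days=30)),
    Recording("rec-3", now - timedelta(days=1)),
]
kept, deleted = apply_retention(history, keep_days=90, now=now)
print([r.recording_id for r in deleted])  # ['rec-1']
```

This only models the policy. As the user-rights section notes, whether every server-side copy is actually purged is something you largely have to take on trust.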

Transparency of Facebook’s Privacy Policies Regarding Smart Speaker Data

Facebook’s privacy policies, while extensive, are often criticized for their complexity and lack of clarity. Finding specific information about smart speaker data collection can require significant effort. The policies often use technical jargon and bury crucial details within lengthy legal documents. While Facebook claims transparency, the reality is that many users struggle to understand the extent of data collection and its implications. Independent organizations regularly analyze Facebook’s privacy policies and offer simplified explanations to help users navigate the complexities. Searching for independent analyses of Facebook’s smart speaker privacy policy can provide a clearer picture.

User Rights Concerning Data Collected by Facebook’s Smart Speakers

Understanding your rights is paramount. While the specifics may vary by jurisdiction, generally, you have the right to:

- Access your data: Request a copy of the data Facebook has collected about your usage of the smart speaker.

- Correct inaccuracies: If the data is incorrect, you have the right to request corrections.

- Delete your data: In many cases, you can request the deletion of your data, though deletion may not always be complete.

- Object to processing: You may have the right to object to the processing of your data for certain purposes, such as targeted advertising.

- Restrict processing: You might be able to limit how Facebook uses your data.

These rights are often outlined in Facebook’s privacy policy and may be further explained in your local data protection laws. Remember to consult those resources for the most accurate and up-to-date information.

Data Security and Potential Vulnerabilities


Let’s be real: connecting a microphone to the internet, especially one tied to a company like Facebook, raises some serious eyebrows. Smart speakers, while convenient, are essentially always-listening devices waiting for a wake word, which makes data security a paramount concern. Understanding the potential risks and Facebook’s efforts to mitigate them is crucial for any user considering integrating this technology into their home.

Facebook’s smart speakers, like any internet-connected device, face a range of potential security vulnerabilities. These vulnerabilities could lead to unauthorized access to user data, eavesdropping on private conversations, or even manipulation of the device itself. The stakes are high, considering the intimate nature of information often shared in the home environment.

Potential Security Risks Associated with Facebook’s Smart Speaker Data

The potential risks extend beyond simple data breaches. For example, a sophisticated attack could exploit vulnerabilities in the device’s software or network connection to gain unauthorized access to the speaker’s microphone and capture private conversations. Furthermore, compromised devices could be used as part of larger botnets, contributing to distributed denial-of-service attacks or other malicious activities. Data breaches could expose sensitive personal information, including conversations, location data, and linked accounts, potentially leading to identity theft or other forms of fraud. Even seemingly minor vulnerabilities could be exploited to track user activity or deliver targeted advertising in a more invasive way.

Facebook’s Data Protection Measures

Facebook employs several measures to protect user data from unauthorized access. These include encryption of data both in transit and at rest, regular security audits, and vulnerability patching. They also use various authentication protocols to verify user identities and prevent unauthorized access to accounts. Furthermore, they claim to employ advanced threat detection systems to identify and respond to potential security threats in real time. The effectiveness of these measures, however, remains a subject of ongoing scrutiny and debate among security experts.
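Facebook doesn’t publish the details of its authentication protocols. Here, instead, is a generic illustration of one widely used pattern, not Facebook’s actual design. A device signs each request with HMAC-SHA256 over a timestamp and the request body, using a per-device shared secret (an assumption here). The server then rejects requests that are stale or have been altered.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # assumed replay window: reject requests more than 5 minutes off

def sign(secret: bytes, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over 'timestamp.body' with the device's shared secret."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()

def verify(secret: bytes, body: bytes, timestamp: int, signature: str,
           now: Optional[int] = None) -> bool:
    """Reject stale requests, then compare signatures in constant time."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign(secret, body, timestamp)
    return hmac.compare_digest(expected, signature)

# Hypothetical per-device secret, assumed to be provisioned at setup time.
device_secret = b"hypothetical-per-device-secret"
ts = 1_700_000_000
body = b'{"cmd": "delete_history"}'
sig = sign(device_secret, body, ts)
print(verify(device_secret, body, ts, sig, now=ts + 10))                       # True
print(verify(device_secret, b'{"cmd": "export_all"}', ts, sig, now=ts + 10))  # False: body altered
print(verify(device_secret, body, ts, sig, now=ts + 3600))                     # False: too old
```

`hmac.compare_digest` avoids leaking information through comparison timing, and the timestamp window limits replays. A production protocol would typically also track nonces and rotate secrets.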

Comparison with Industry Best Practices

While Facebook claims to adhere to industry best practices, the specifics of its security protocols are not always publicly available. This limited transparency about how security measures are implemented makes a direct comparison with other smart speaker manufacturers difficult. Industry best practices generally emphasize robust encryption, regular security updates, and independent security audits. The degree to which Facebook’s practices align with these standards is a matter of ongoing discussion and assessment.

Potential Vulnerabilities and Facebook’s Mitigation Efforts

Below is a list of potential vulnerabilities and the corresponding mitigation strategies reportedly employed by Facebook. It’s important to remember that the effectiveness of these strategies is constantly evolving and subject to change.


| Potential Vulnerability | Facebook’s Reported Mitigation Strategy |
|---|---|
| Software vulnerabilities leading to remote code execution | Regular software updates and penetration testing |
| Network vulnerabilities allowing unauthorized access | Secure network protocols and firewalls |
| Microphone eavesdropping via malware | Anti-malware software and threat detection systems |
| Data breaches compromising user data | Data encryption and access control mechanisms |

Ethical Considerations and User Consent

Facebook’s foray into the smart speaker market raises significant ethical questions surrounding data collection. The always-listening nature of these devices, coupled with Facebook’s extensive data-gathering practices, presents a potential privacy minefield for users. The inherent tension lies in balancing the convenience and functionality offered by these devices against the potential for intrusive surveillance and misuse of personal information.

The ethical implications are multifaceted. Data collected from smart speakers can reveal intimate details about users’ lives, including conversations, routines, and preferences. This information, if improperly handled or accessed, could be used for targeted advertising, manipulation, or even more serious forms of exploitation. The potential for algorithmic bias further compounds these concerns, as algorithms trained on biased data can perpetuate and amplify existing societal inequalities. For example, if a smart speaker’s voice recognition system struggles to understand certain accents, it could lead to discriminatory outcomes.
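A common way to check for the accent problem described above is to compare recognition accuracy across speaker groups. The sketch below does that on toy inputs (made-up outcomes, not real measurements) and reduces the gap to a single disparity ratio. The group names are placeholders.

```python
def accuracy_by_group(results: dict[str, list[bool]]) -> dict[str, float]:
    """Fraction of commands recognized correctly, per speaker group."""
    return {group: sum(outcomes) / len(outcomes)
            for group, outcomes in results.items() if outcomes}

def disparity_ratio(accuracies: dict[str, float]) -> float:
    """Lowest group accuracy divided by highest; 1.0 means parity."""
    highest = max(accuracies.values())
    return min(accuracies.values()) / highest if highest else 1.0

# Toy inputs: True = command understood. Not real measurements.
toy_results = {
    "accent_a": [True, True, True, True, False],
    "accent_b": [True, False, True, False, False],
}
acc = accuracy_by_group(toy_results)
print(acc)                              # {'accent_a': 0.8, 'accent_b': 0.4}
print(round(disparity_ratio(acc), 2))   # 0.5
```

A ratio well below 1.0 means one group is understood far less reliably than another. That is exactly the kind of outcome that can turn into unequal treatment once the device mediates shopping, search, or support.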

Informed Consent Procedures for Data Collection

Obtaining truly informed consent from users regarding data collection is a complex process. Facebook needs to provide clear, concise, and easily understandable information about what data is collected, how it is used, and with whom it is shared. This information should be presented in a way that avoids jargon and technical terms, ensuring accessibility for all users. Moreover, the consent process should be actively solicited, not implied through pre-selected settings or confusing opt-out options. A truly informed consent model would empower users to make conscious choices about their data, allowing them to opt out of specific data collection practices or limit the use of their data for certain purposes. Current practices often fall short of this ideal, relying heavily on lengthy privacy policies that many users don’t read or fully understand.
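The difference between active, granular consent and pre-selected settings is easy to express in code. Every purpose starts out not consented, the user opts in one purpose at a time, and processing checks for that specific purpose before it runs. This is a sketch with hypothetical purpose names, not a description of Facebook’s consent system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical processing purposes a user can consent to individually.
PURPOSES = ("voice_processing", "product_improvement", "targeted_advertising")

@dataclass
class ConsentRecord:
    """Per-purpose consent: nothing is granted until the user actively opts in."""
    granted: dict[str, datetime] = field(default_factory=dict)

    def opt_in(self, purpose: str) -> None:
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.granted[purpose] = datetime.now(timezone.utc)  # record when consent was given

    def withdraw(self, purpose: str) -> None:
        self.granted.pop(purpose, None)  # withdrawing is as easy as granting

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted

consent = ConsentRecord()
consent.opt_in("voice_processing")
print(consent.allows("voice_processing"))      # True
print(consent.allows("targeted_advertising"))  # False: never opted in, no pre-selected default
```

Storing the time of each opt-in also leaves a record of when consent was given, which matters once consent can be withdrawn.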

Relevant Legal Frameworks and Facebook’s Compliance

Several legal frameworks govern data privacy, including the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA), which protects California residents in the United States. These regulations establish specific requirements for data collection, storage, and use, including the need for transparency, user consent, and data security. Facebook’s smart speaker data practices must comply with these laws, and any discrepancies could lead to significant legal and reputational consequences. For instance, failing to provide users with adequate control over their data or experiencing a data breach could result in substantial fines and legal action. The company’s adherence to these legal frameworks is constantly scrutinized, and any deviation could severely damage its credibility with users.

Alignment with Ethical Guidelines for Data Privacy

Facebook’s current data practices concerning smart speakers, judged against established ethical guidelines, present a mixed picture. While the company has made efforts to improve data security and transparency, certain aspects fall short of ideal ethical standards. The sheer volume of data collected, the potential for unintended consequences due to algorithmic bias, and the lack of truly granular user control over data usage remain significant concerns. A greater emphasis on data minimization – collecting only the data strictly necessary for the device’s functionality – would be a significant step towards ethical alignment. Furthermore, implementing robust mechanisms for user control and ensuring meaningful data transparency are crucial for achieving better alignment with established ethical guidelines. Independent audits and third-party verification of Facebook’s data practices could further build user trust and demonstrate a commitment to ethical data handling.
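Data minimization can be enforced mechanically. One way is to allow-list the fields each purpose genuinely needs and drop everything else before the data is stored. The purposes and field names below are hypothetical.

```python
# Hypothetical allow-list: the only fields each purpose is assumed to need.
REQUIRED_FIELDS = {
    "answer_command": {"transcript", "timestamp"},
    "play_music": {"transcript"},
}

def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields allow-listed for this purpose; unknown purposes get nothing."""
    allowed = REQUIRED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}

event = {
    "transcript": "play some jazz",
    "timestamp": "2024-06-01T21:15:00Z",
    "location": "51.5,-0.12",
    "contacts_nearby": ["alice", "bob"],
}
print(minimize(event, "play_music"))  # {'transcript': 'play some jazz'}
```

The default is deny. An unknown purpose gets an empty record, so any new use of the data has to be justified and added to the allow-list explicitly.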

Impact on User Behavior and Trust

Facebook’s data collection practices on its smart speakers, while potentially offering personalized experiences, significantly influence user behavior and, more importantly, erode trust. The constant awareness of being listened to, even passively, alters how users interact with the device and the broader Facebook ecosystem. This impact isn’t just a fleeting feeling; it’s a fundamental shift in the user-platform relationship, potentially leading to long-term consequences.

The collection of voice data, coupled with other Facebook data points, creates a detailed profile of the user. This detailed profile is used to target advertising and personalize content, leading users down a path of algorithmic reinforcement. Users might find themselves increasingly exposed to information that confirms their pre-existing biases, limiting exposure to diverse perspectives and potentially influencing their purchasing decisions and even political views. The subtle manipulation of user experience through targeted advertising, based on intimate data gathered from their own homes, is a powerful force shaping behavior.

User Behavior Modification Through Targeted Advertising

The personalization offered by Facebook’s smart speakers is a double-edged sword. While convenient, the hyper-targeted advertising based on voice data can subtly manipulate user choices. For example, a user frequently discussing a particular brand of coffee might be consistently shown ads for that brand, or even for competing brands trying to capture market share. This constant exposure can lead to impulsive purchases or brand loyalty formed not through genuine preference, but through repeated algorithmic reinforcement. Furthermore, the ease of voice-activated shopping could lead to increased spending, as the friction of traditional online purchasing is removed.
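The “repeated algorithmic reinforcement” described above is a feedback loop. Here’s a toy, deterministic simulation built on two assumptions. First, a naive recommender always shows the brand with the highest interest score. Second, each exposure nudges that score up. Neither assumption describes Facebook’s actual ad system.

```python
def simulate(interest: dict[str, float], rounds: int, boost: float = 0.1) -> dict[str, int]:
    """Naive recommender: always show the top-scoring brand, then boost its score."""
    scores = dict(interest)  # copy so the caller's dict is not mutated
    exposures = {brand: 0 for brand in scores}
    for _ in range(rounds):
        shown = max(scores, key=scores.get)  # greedy pick; ties go to the first brand
        exposures[shown] += 1
        scores[shown] += boost  # assumed: each exposure raises measured interest
    return exposures

# A slight initial lean toward one brand (toy numbers).
print(simulate({"brand_a": 0.51, "brand_b": 0.49}, rounds=10))  # {'brand_a': 10, 'brand_b': 0}
```

A slight 51/49 lean turns into 100% of the exposure. Real ad systems are far more complex and usually mix in some exploration, but this is the basic rich-get-richer shape the paragraph describes.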

Erosion of User Trust and Confidence

Data breaches and privacy concerns surrounding Facebook are already well-documented. The addition of smart speaker data collection only exacerbates these issues. The potential for unauthorized access to sensitive personal information, like conversations about health or financial matters, significantly undermines user trust. News reports of data leaks and questionable data handling practices by Facebook have already damaged public confidence, and the integration of smart speaker data into this existing ecosystem further erodes user trust. The feeling of being constantly monitored within one’s own home can create a sense of unease and distrust, leading users to actively avoid using the device or even the Facebook platform altogether.

Long-Term Consequences on User Relationships with Facebook

The cumulative effect of Facebook’s data practices could lead to a significant decline in user engagement and a growing sense of alienation. As users become more aware of the extent of data collection and its implications, they may choose to limit their interaction with the platform, opting for alternative smart speaker solutions or digital assistants that offer greater privacy assurances. This could result in a long-term shift in the power dynamic between Facebook and its users, with users demanding more transparency and control over their data. The loss of trust could also affect the future success of Facebook’s products and services, as users may become less willing to share personal information or participate in activities that generate data.

Illustrative Depiction of Data Collection’s Impact on User Trust

Imagine a seesaw. On one side sits a smiling user, confidently interacting with a Facebook smart speaker, represented by a bright, friendly-looking device. On the other side is a slowly growing pile of data points – tiny, almost invisible at first, but steadily increasing in size and weight. Each data point represents a conversation, a search, a purchase suggestion, all meticulously collected and analyzed. As the pile grows, the seesaw tilts, slowly pushing the smiling user down and replacing their smile with a concerned frown. The once-bright speaker now appears shadowy and ominous, reflecting the growing distrust and unease as the user realizes the weight of the data collected. The seesaw represents the balance between user convenience and privacy; the increasing data collection tips the scales towards distrust.

Comparison with Competitor Practices

The privacy landscape for smart speakers is a complex one, with each major player adopting a slightly different approach to data collection and usage. Understanding these nuances is crucial for consumers who want to make informed choices about which devices they bring into their homes. While Facebook’s practices have been under scrutiny, a direct comparison with competitors reveals both similarities and significant differences in their handling of user data. This comparison helps paint a clearer picture of the overall smart speaker privacy ecosystem.

Analyzing the data handling practices of major smart speaker manufacturers reveals a spectrum of approaches, ranging from relatively transparent to significantly opaque. Some companies prioritize user control and data minimization, while others collect extensive data for targeted advertising and product improvement. These differences directly impact the level of user trust and the potential risks associated with using these devices.

Smart Speaker Privacy Policy Comparison

The following table summarizes the key privacy aspects of three major smart speaker manufacturers: Amazon (Alexa), Google (Google Assistant), and Apple (Siri).

| Feature | Amazon (Alexa) | Google (Google Assistant) | Apple (Siri) |
|---|---|---|---|
| Data Collected | Voice recordings, device usage data, location data, purchase history (if linked to Amazon account). Data is often anonymized and aggregated for product improvement. | Voice recordings, device usage data, location data, search history (if linked to Google account). Data used for personalized advertising and service improvement. | Voice recordings (only when explicitly requested), limited device usage data. Strong emphasis on on-device processing and encryption. |
| Data Storage | Data stored on Amazon servers, with options for voice recording deletion. | Data stored on Google servers, with options for voice recording deletion and history management. | Data stored on Apple servers with end-to-end encryption for many features. |
| Data Usage | Used for product improvement, targeted advertising, and potentially law enforcement requests. | Used for product improvement, personalized advertising, and various Google services integration. | Primarily used for service improvement, with limited use for advertising. Strong focus on user privacy. |
| User Control | Users can review and delete voice recordings, manage linked accounts, and adjust notification settings. | Users can review and delete voice recordings, manage linked accounts, and adjust privacy settings within Google account. | Users have limited control over data collection, but the default settings prioritize privacy. |
| Transparency | Privacy policy is available, but the complexity can be challenging for average users to fully understand. | Privacy policy is available, but similar to Amazon, can be complex for comprehension. | Apple emphasizes transparency and simplicity in its privacy policy, though some details remain technical. |

Final Review


So, is Facebook’s smart speaker privacy a total nightmare? Well, it’s complicated. While they claim to anonymize data and have security measures in place, the sheer volume of information collected raises serious concerns. Ultimately, you need to decide how much you trust Facebook with your daily life – and armed with this knowledge, you can make informed choices about what you allow into your home. Time to take control of your digital privacy, one smart speaker at a time.