Microsoft Ban Offensive Language: Xbox Live & Skype – Ever wondered how a tech giant tackles the toxic swamp of online interactions? This deep dive explores Microsoft’s ongoing battle against offensive language on its massive platforms, Xbox Live and Skype. We’ll dissect their policies, examine enforcement methods, and uncover the surprisingly complex world of online moderation.

From automated filters to human moderators, the fight for a cleaner digital space is far from over. We’ll look at the challenges Microsoft faces, from identifying subtle forms of hate speech to balancing free speech with user safety. Prepare for a rollercoaster ride through the ethical, technological, and legal minefields of online communication.

Microsoft’s Policy on Offensive Language


Microsoft’s journey in moderating online communication across Xbox Live and Skype reflects a broader industry struggle to balance freedom of expression with the need for a safe and respectful online environment. Their policies have evolved significantly over time, responding to technological advancements, societal shifts in acceptable language, and increasing user expectations. This evolution highlights the complexities of defining and enforcing standards for online behavior.

Microsoft’s approach to offensive language has been a continuous work in progress. Early iterations of their policies were arguably less robust, relying more on reactive measures than proactive prevention. The rise of online harassment and the increasing sophistication of abusive tactics necessitated a more comprehensive and nuanced approach. This involved investing in advanced moderation tools, refining reporting mechanisms, and developing clearer guidelines for users.

Historical Evolution of Microsoft’s Policies

Microsoft’s policies regarding offensive language on Xbox Live and Skype have gradually become stricter over the years. Initially, enforcement relied heavily on user reports and a relatively simple keyword-based filtering system. This proved insufficient to combat the creativity and persistence of those seeking to circumvent restrictions. Later iterations incorporated machine learning algorithms to identify and flag potentially offensive content more effectively, alongside a more robust appeals process for users who felt their content was unfairly flagged. The company has also increased investment in human moderators to review flagged content and handle complex cases requiring nuanced judgment. This evolution reflects a growing understanding of the limitations of purely automated systems and the need for human oversight in content moderation.

Comparison with Other Online Platforms

Compared to other major online platforms like Facebook, Twitter, and YouTube, Microsoft’s approach sits somewhere in the middle. While not as aggressively proactive as some platforms in preemptively identifying and removing offensive content, their policies are stricter than those of some smaller platforms. For example, Microsoft’s reliance on both automated systems and human moderation mirrors practices employed by Facebook and YouTube, albeit perhaps on a smaller scale given the more focused nature of Xbox Live and Skype compared to the vastness of their social media counterparts. Twitter, known for its more hands-off approach to content moderation, presents a contrasting example. The relative stringency of each platform’s policies often reflects its specific user base and the type of content typically shared.

Types of Offensive Language and Consequences

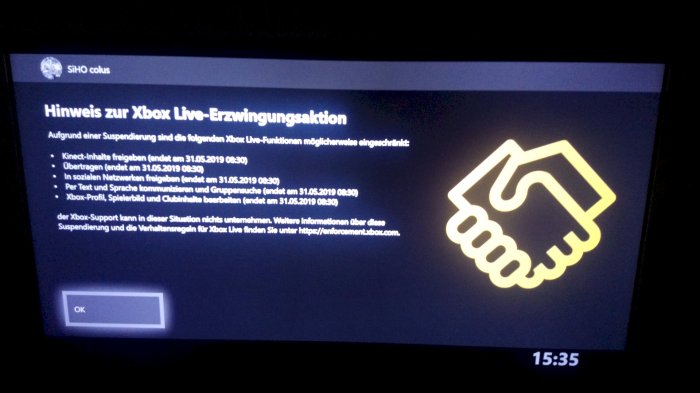

Microsoft’s policies broadly prohibit hate speech, harassment, threats, and sexually explicit content. This includes, but is not limited to, slurs targeting racial, ethnic, religious, or sexual orientation groups; threats of violence or harm; repeated abusive behavior towards other users; and the distribution of sexually suggestive or explicit material. Violations can result in a range of consequences, from temporary suspensions of accounts to permanent bans. The severity of the penalty depends on the nature and frequency of the offense. Repeated violations typically lead to more severe penalties.

A Hypothetical Improved Policy

A refined policy could address gray areas by incorporating a more nuanced approach to context. Currently, automated systems might struggle to distinguish between playful banter and genuinely offensive language. An improved system could utilize more sophisticated natural language processing to understand the intent and context of communication, reducing the risk of false positives. Additionally, the policy could clarify the distinction between protected speech and speech that incites violence or harassment, providing clearer guidelines for users and moderators. This might involve establishing a tiered system of penalties, with warnings for minor infractions and progressively stricter sanctions for repeated or more severe offenses. This system could be coupled with improved user education programs to help users understand the boundaries of acceptable online behavior. For example, a system that considers the history of interaction between users could differentiate between a single outburst and a pattern of abusive behavior.
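The tiered penalty idea above can be sketched in a few lines. This is a minimal illustration, not Microsoft's actual policy: the severity levels, the penalty names in `LADDER`, and the rule of moving one step up per prior violation are all assumptions made for the example.

```python
from enum import Enum


class Severity(Enum):
    MINOR = 1    # e.g. mild profanity (assumed category)
    SERIOUS = 2  # e.g. targeted harassment (assumed category)
    SEVERE = 3   # e.g. threats of violence (assumed category)


# Hypothetical escalation ladder, ordered from mildest to harshest.
LADDER = ["warning", "24h_suspension", "7d_suspension", "permanent_ban"]


def next_penalty(severity: Severity, prior_violations: int) -> str:
    """Pick a penalty from the offense severity and the user's history."""
    # A more severe offense starts further up the ladder.
    start = severity.value - 1
    # Each prior violation moves one step up, capped at the top rung.
    step = min(start + prior_violations, len(LADDER) - 1)
    return LADDER[step]


if __name__ == "__main__":
    print(next_penalty(Severity.MINOR, 0))   # first minor offense
    print(next_penalty(Severity.MINOR, 2))   # third minor offense
    print(next_penalty(Severity.SEVERE, 0))  # first severe offense
```

Keeping the ladder as data rather than branching logic means a policy team could, in principle, adjust the steps without touching the decision code.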

Enforcement Mechanisms and Effectiveness


Microsoft’s fight against offensive language on Xbox Live and Skype is a constant battle against a flood of user-generated content. The company employs a multi-pronged approach, balancing automated systems with human oversight, but the effectiveness of these methods remains a complex issue. The sheer volume of communication and the evolving nature of offensive language present significant hurdles.

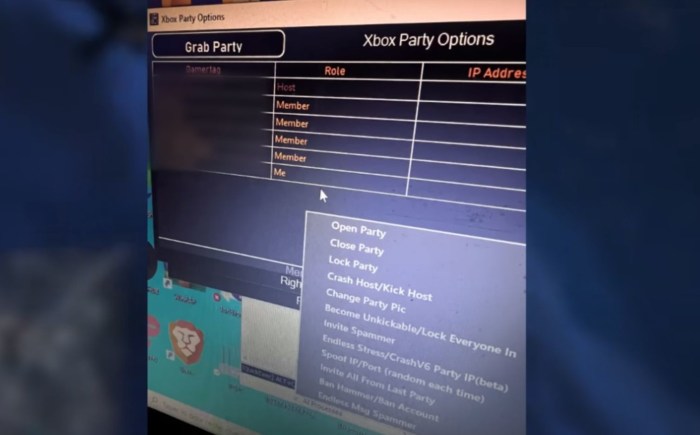

Enforcement of Microsoft’s policy relies on a combination of automated filters, human moderators, and user reporting. Automated filters scan text and voice chat for keywords and phrases associated with hate speech, harassment, and other violations. Human moderators review flagged content and user reports, making final decisions on whether to take action, such as issuing warnings, suspending accounts, or permanently banning users. User reporting allows players to flag offensive behavior directly, prompting a review by Microsoft’s moderation team. This system, however, isn’t without its flaws.

Automated Filtering and its Limitations

Automated filters, while efficient for processing large volumes of data, are prone to false positives and false negatives. A filter might flag harmless phrases as offensive, leading to unnecessary penalties for users, or it might miss genuinely offensive content due to the ever-changing nature of online slang and coded language. For example, a filter might incorrectly flag a discussion about a historical event containing potentially offensive terminology used in context, while simultaneously failing to identify subtle forms of harassment disguised within seemingly innocuous conversations. This necessitates human review to ensure fairness and accuracy. The reliance on keyword detection also means that sophisticated forms of abuse, which cleverly avoid explicit keywords, often slip through the cracks.
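A toy example makes both failure modes concrete. The sketch below is an assumption-laden illustration, not a description of any real filter: `BLOCKLIST` holds two mild placeholder terms, and the two functions are hypothetical. The substring version produces a false positive on an innocent word, and both versions produce a false negative on a lightly obfuscated insult.

```python
import re

# Placeholder blocklist; a production list would be far larger and curated.
BLOCKLIST = {"idiot", "trash"}


def naive_filter(message: str) -> bool:
    """Flag a message if any blocklisted term appears as a substring."""
    lowered = message.lower()
    return any(term in lowered for term in BLOCKLIST)


def word_filter(message: str) -> bool:
    """Flag only whole-word matches, which avoids some false positives."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKLIST for word in words)


if __name__ == "__main__":
    samples = [
        "take out the trashcan",  # harmless, but contains "trash"
        "you are an 1d10t",       # insult with digits swapped in
        "you idiot",              # plain insult
    ]
    for text in samples:
        print(text, naive_filter(text), word_filter(text))
```

Whole-word matching removes the "trashcan" false positive, but neither approach catches the digit-swapped spelling, which is the kind of evasion that pushes platforms toward learned models and human review.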

Human Moderation: Challenges and Scale

Human moderation is crucial for addressing the limitations of automated systems. However, the sheer volume of user-generated content on Xbox Live and Skype makes it incredibly challenging to maintain adequate human oversight. The process is resource-intensive, requiring a large team of moderators to review flagged content and user reports. Furthermore, the task is emotionally taxing, exposing moderators to constant streams of hateful and abusive content. This can lead to burnout and decreased effectiveness over time. There is also the challenge of consistent application of the policy across different moderators and regions, which can lead to discrepancies in enforcement.

User Reporting and Community Involvement

User reporting serves as an important supplementary mechanism, empowering the community to actively participate in content moderation. However, the effectiveness of user reporting hinges on several factors. Firstly, users need to be aware of the reporting mechanisms and feel confident that their reports will be acted upon. Secondly, the reporting process needs to be simple and straightforward, to encourage users to report offenses promptly. Finally, Microsoft needs to provide feedback to reporters, to maintain trust and transparency in the system. The lack of feedback or apparent inaction can lead to a sense of futility among users and reduce the effectiveness of the reporting system.

Comparison of Enforcement Strategies

| Enforcement Strategy | Strengths | Weaknesses | Effectiveness |
|---|---|---|---|
| Automated Filters | High throughput, cost-effective for initial screening | High false positive/negative rate, easily circumvented by sophisticated abusers | Moderate, requires human oversight |
| Human Moderation | High accuracy, can handle nuanced cases | High cost, low throughput, potential for bias and inconsistency | High, but limited by scale |
| User Reporting | Leverages community involvement, identifies hidden abuse | Relies on user participation, can be prone to abuse and bias | Variable, depends on user engagement and response time |
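One way to picture how the three strategies fit together is a simple triage rule. The sketch below is purely illustrative: the function name `route`, the idea of a classifier confidence score, and the thresholds (0.95, 0.50, three reports) are assumptions for the example, not documented Microsoft behavior.

```python
def route(classifier_score: float, report_count: int) -> str:
    """Decide what happens to a message.

    classifier_score: hypothetical model confidence in [0, 1] that the
    message violates policy.
    report_count: number of user reports filed against the message.
    """
    if classifier_score >= 0.95:
        # Very confident automated detection: act without waiting.
        return "auto_action"
    if classifier_score >= 0.50 or report_count >= 3:
        # Uncertain model output or repeated community flags: ask a human.
        return "human_review"
    return "no_action"


if __name__ == "__main__":
    print(route(0.99, 0))  # clear-cut case
    print(route(0.60, 0))  # borderline, model only
    print(route(0.10, 4))  # model missed it, community flagged it
    print(route(0.10, 1))  # single report, low score
```

The design choice here is that automation handles only the clear cases, while both uncertain scores and user reports feed the human queue, which reflects the trade-offs listed in the table.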

User Experience and Impact


Microsoft’s crackdown on offensive language across Xbox Live and Skype is a big move towards a more civil online environment. It’s interesting to consider this in light of Craigslist’s decision to shut down its personals section in the U.S. Both actions reflect a growing awareness of the need to curb harmful online interactions, even if the approaches differ significantly.

Ultimately, the goal remains the same: fostering safer digital spaces.

The pervasive nature of online interactions means that the experience of encountering offensive language on platforms like Xbox Live and Skype significantly impacts millions of users. The emotional toll, coupled with the potential for harassment and the erosion of community spirit, demands a thorough examination of its effects. This section delves into user experiences, the overall impact on platform usability, and the potential psychological consequences of online exposure to offensive language.

Understanding the user experience surrounding offensive language requires looking at both the direct victims and the wider community. The impact extends beyond individual incidents, shaping the overall atmosphere and influencing how people engage with these platforms.

User Experiences with Offensive Language

Direct exposure to hateful speech online can leave lasting negative impressions. Here are some examples of user experiences reported across various online forums and news articles:

- A gamer consistently subjected to racist slurs during online matches experienced a decline in their enjoyment of the game, eventually leading to them quitting the online community.

- A Skype user was subjected to sustained harassment via private messages, resulting in significant emotional distress and anxiety.

- Multiple users have reported feeling unsafe and unwelcome in online gaming communities due to the prevalence of sexist and homophobic remarks.

Impact on User Experience

The presence of offensive language dramatically alters the user experience on platforms like Xbox Live and Skype. It transforms spaces designed for connection and entertainment into hostile and unwelcoming environments. This has several significant consequences:

- Reduced engagement: Users may actively avoid online interactions, reducing the overall activity and vibrancy of the community. This decrease in participation can lead to a decline in user satisfaction and potentially impact the platform’s profitability.

- Damaged community atmosphere: A toxic environment fosters distrust and discourages positive interactions. Users are less likely to cooperate, share experiences, or help each other when they fear being targeted by hateful speech.

- Decreased platform value: The overall reputation of a platform can suffer when offensive language becomes prevalent. This can affect user retention and attract negative press, damaging the platform’s brand image.

Psychological Effects of Online Offensive Language

Exposure to online hate speech can have profound psychological consequences. The impact extends beyond fleeting annoyance, potentially leading to significant mental health issues.

- Increased anxiety and depression: Constant exposure to harassment and hateful messages can trigger anxiety, depression, and feelings of isolation. Victims may experience sleep disturbances, difficulty concentrating, and a general decline in overall well-being. Studies have linked cyberbullying to increased rates of suicide attempts among young people.

- Reduced self-esteem: Being the target of online abuse can severely damage a person’s self-esteem and confidence. Constant negativity can lead to feelings of worthlessness and inadequacy, impacting their personal and professional lives.

- Trauma and PTSD: In severe cases, prolonged exposure to online harassment can lead to post-traumatic stress disorder (PTSD). Victims may experience flashbacks, nightmares, and avoidance behaviors related to their online experiences.

Positive User Experience Through Effective Moderation

Imagine a scenario where a gamer joins an online match on Xbox Live. In the past, this might have meant bracing themselves for the inevitable barrage of insults. However, thanks to improved moderation systems and AI-powered detection, the chat remains respectful and focused on gameplay. Players are able to collaborate, strategize, and enjoy the game without fear of harassment. This fosters a positive community where players feel safe, respected, and encouraged to participate actively. The overall experience is far more enjoyable, leading to increased user engagement and a stronger sense of community.

Technological Solutions and Innovations

The battle against offensive language online is far from over, and Microsoft, like many tech giants, is constantly evolving its strategies. While human moderators play a crucial role, the sheer volume of data necessitates technological solutions that can sift through mountains of text and identify harmful content swiftly and accurately. This is where artificial intelligence and machine learning step in, promising a future where toxic online interactions are minimized.

AI and machine learning offer a powerful toolkit for detecting and preventing offensive language. These systems can be trained on vast datasets of text, learning to identify patterns and linguistic cues associated with hate speech, harassment, and other forms of abuse. This allows for automated flagging of potentially offensive content, significantly reducing the workload on human moderators and enabling quicker responses to violations. Sophisticated algorithms can even adapt and learn over time, becoming more effective at identifying new and evolving forms of offensive language.

Challenges in AI-Powered Language Moderation

The application of AI to language moderation isn’t without its hurdles. One significant challenge is the inherent bias present in the data used to train these systems. If the training data reflects existing societal biases, the AI model will likely perpetuate and even amplify these biases in its judgments. For example, an AI trained primarily on data from one cultural context might misinterpret expressions or idioms from another, leading to false positives or missed instances of offensive language. Furthermore, the nuanced nature of language makes it incredibly difficult for AI to accurately interpret context. Sarcasm, irony, and humor can easily be misconstrued as offensive, resulting in unfair moderation decisions. The constant evolution of slang and online language also presents a challenge, requiring continuous retraining and updates to the AI models.

A Novel Technological Solution: Contextualized Sentiment Analysis with Multi-lingual Support

To address the limitations of existing systems, a new approach is needed. A proposed solution is a system that combines contextualized sentiment analysis with robust multi-lingual support. This system would leverage advanced natural language processing techniques to understand not just the literal meaning of words, but also the intended sentiment and context within a given message. It would achieve this by analyzing the surrounding text, considering factors like tone, emoji usage, and the history of the conversation. Furthermore, the system would incorporate a diverse range of languages, mitigating biases stemming from a focus on a single language. The system’s limitations would include potential difficulties in interpreting highly nuanced forms of sarcasm or irony, and the need for continuous updates to account for evolving linguistic trends. However, its potential to improve fairness and accuracy in moderation decisions is significant.
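To make the proposal a little more tangible, here is a deliberately simplified sketch of context-aware scoring. Everything in it is an assumption for illustration: the placeholder lexicon `HARSH_TERMS`, the emoji set, the point values, and the `Conversation` type. A real system would use a trained language model rather than hand-written rules, but the shape of the inputs (message text, emoji, conversation history) is the same.

```python
import re
from dataclasses import dataclass, field

HARSH_TERMS = {"trash", "garbage", "loser"}  # placeholder lexicon
FRIENDLY_EMOJI = {"😂", "😄", "😉"}            # placeholder tone signal


@dataclass
class Conversation:
    # Earlier messages as (sender, text) pairs, oldest first.
    history: list = field(default_factory=list)


def is_harsh(text: str) -> bool:
    """True if the text contains a whole word from the placeholder lexicon."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & HARSH_TERMS)


def context_score(sender: str, recipient: str, text: str,
                  convo: Conversation) -> int:
    """Return a 0-100 risk score; higher means more likely abusive."""
    if not is_harsh(text):
        return 0
    score = 60  # assumed baseline for any harsh term
    # A playful emoji in the same message lowers the score.
    if any(emoji in text for emoji in FRIENDLY_EMOJI):
        score -= 20
    reciprocal = any(who == recipient and is_harsh(msg)
                     for who, msg in convo.history)
    if reciprocal:
        # The recipient has used similar language: likely mutual banter.
        score -= 20
    else:
        # One-sided repetition from the sender raises the score.
        prior = sum(1 for who, msg in convo.history
                    if who == sender and is_harsh(msg))
        score += min(10 * prior, 40)
    return max(0, min(score, 100))


if __name__ == "__main__":
    banter = Conversation([("bob", "you loser 😂")])
    print(context_score("alice", "bob", "haha you are trash 😂", banter))
    pattern = Conversation([("alice", "loser"), ("alice", "garbage player")])
    print(context_score("alice", "bob", "trash", pattern))
```

The same words score low in a mutual, emoji-laden exchange and high in a one-sided pattern, which is the distinction the proposed system is meant to capture. The rules would still misread sarcasm, as the limitations above acknowledge.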

Improving Natural Language Processing for Enhanced Accuracy and Fairness

Improvements in natural language processing (NLP) are crucial for advancing the accuracy and fairness of language moderation systems. More sophisticated NLP models, such as those based on transformer architectures, can better understand the context and meaning of language, reducing the likelihood of misinterpretations. Furthermore, incorporating techniques like adversarial training can help identify and mitigate biases in the model. Adversarial training involves exposing the model to examples designed to challenge its assumptions and identify potential weaknesses, forcing it to learn more robust and fair representations of language. This would allow for a system that is less prone to false positives and more effective at identifying truly offensive content, regardless of the linguistic style or cultural context. The application of explainable AI (XAI) techniques could also enhance transparency and accountability, allowing users to understand why a particular message was flagged as offensive.
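The data side of adversarial training can be sketched without any machine learning library. The example below only generates perturbed spellings that a model could later be retrained on; it does not train anything, and it uses fixed substitution rules rather than perturbations crafted against a specific model. The substitution table and both function names are assumptions for illustration.

```python
import itertools

# Assumed character swaps commonly seen in obfuscated text. Each value
# starts with the plain letter so the first variant is the original word.
SUBSTITUTIONS = {"a": "a4@", "e": "e3", "i": "i1!", "o": "o0", "s": "s5$"}

# Reverse lookup: obfuscated character -> plain letter.
REVERSE = {alt: plain for plain, alts in SUBSTITUTIONS.items() for alt in alts}


def obfuscated_variants(word: str, limit: int = 20) -> list:
    """Generate up to `limit` spellings of `word` using the swap table."""
    pools = [SUBSTITUTIONS.get(ch, ch) for ch in word.lower()]
    combos = itertools.islice(itertools.product(*pools), limit)
    return ["".join(combo) for combo in combos]


def normalize(text: str) -> str:
    """Map known obfuscation characters back to plain letters.

    Caveat: this also rewrites ordinary punctuation and digits
    (for example a trailing "!"), so it is only a rough pre-filter.
    """
    return "".join(REVERSE.get(ch, ch) for ch in text.lower())


if __name__ == "__main__":
    print(obfuscated_variants("idiot", limit=3))
    print(normalize("1d10t"))
```

Feeding such variants, labeled the same as the original term, into a training set is one simple way to expose a model to inputs designed to slip past it. Model-driven adversarial example generation goes further, but follows the same loop of generating hard cases and retraining on them.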

Community and Moderation Practices

Microsoft’s success in curbing offensive language on Xbox Live and Skype hinges not only on its technical capabilities but also on robust community and moderation practices. A well-designed system needs to foster a sense of shared responsibility and empower users to contribute to a positive online environment, while simultaneously providing clear guidelines and effective enforcement mechanisms. This involves learning from best practices implemented by other platforms and continuously refining internal processes.

Successful community moderation isn’t a one-size-fits-all solution; it requires a multifaceted approach. Effective strategies incorporate technological solutions with proactive community engagement and user empowerment. The balance between automated systems and human moderation plays a crucial role in achieving a positive user experience.

Examples of Successful Community Moderation Practices

Several online platforms have demonstrated effective community moderation strategies. Reddit, for instance, utilizes a combination of automated filters for spam and hate speech alongside a hierarchical moderation system where subreddit moderators play a crucial role in enforcing community rules. Discord employs a similar system, empowering server administrators to set their own rules and moderate their communities. These platforms demonstrate the importance of distributing moderation responsibilities, leveraging the knowledge and engagement of the community itself. Furthermore, platforms like Twitch have implemented robust reporting systems and dedicated moderation teams to address violations swiftly. These teams often utilize sophisticated tools to detect and address violations proactively.

User Reporting and Feedback Mechanisms

Different platforms employ varying approaches to user reporting and feedback. Some utilize simple flagging systems, while others incorporate more sophisticated mechanisms such as tiered reporting (allowing users to escalate reports if initial responses are unsatisfactory). The effectiveness of these systems depends on factors such as the clarity of reporting guidelines, the speed of response, and the transparency of the moderation process. A well-designed system will provide users with clear feedback on their reports, explaining the actions taken (or why no action was taken). By contrast, systems lacking transparency can lead to user frustration and a decline in reporting rates. Effective systems often incorporate mechanisms for user feedback on the moderation process itself, allowing for continuous improvement.
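A tiered reporting flow with feedback to the reporter might look like the sketch below. The tier names, the `Report` class, and its methods are hypothetical and chosen only to illustrate escalation and a visible decision log; no particular platform's API is implied.

```python
from dataclasses import dataclass, field

# Hypothetical review tiers, lowest first.
TIERS = ["automated_triage", "moderator", "senior_reviewer"]


@dataclass
class Report:
    report_id: int
    description: str
    tier: int = 0
    # Human-readable trail that could be shown back to the reporter.
    log: list = field(default_factory=list)

    def resolve(self, outcome: str, reason: str) -> None:
        """Record the decision made at the current tier, with its reason."""
        self.log.append(f"{TIERS[self.tier]}: {outcome} ({reason})")

    def escalate(self) -> bool:
        """Move the report up one tier; return False if already at the top."""
        if self.tier + 1 >= len(TIERS):
            return False
        self.tier += 1
        self.log.append(f"escalated to {TIERS[self.tier]}")
        return True


if __name__ == "__main__":
    report = Report(1, "slur in voice chat")
    report.resolve("no_action", "insufficient evidence")
    report.escalate()
    report.resolve("24h_suspension", "confirmed on review")
    for entry in report.log:
        print(entry)
```

Recording a reason with every outcome is what makes the feedback loop possible: the reporter can see why no action was taken and decide whether to escalate.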

Empowering Users to Moderate Their Own Communities

Empowering users to moderate their own communities can significantly contribute to a more positive online environment. This approach fosters a sense of ownership and responsibility among users, leading to increased self-regulation and a reduction in offensive behavior. However, it’s crucial to provide users with the necessary training, tools, and support to effectively moderate their communities. Clear guidelines, robust reporting mechanisms, and access to moderation resources are essential for this approach to be successful. Furthermore, regular feedback and communication with community moderators can ensure alignment with platform-wide policies and values. This approach is seen as particularly effective in niche communities where users share a common interest and a strong desire to maintain a positive environment.

A Guide for Reporting Offensive Language

Reporting offensive language is crucial for maintaining a positive online environment. Here’s a guide outlining the process:

- Identify the offensive content: This includes hateful speech, harassment, threats, or any other violation of Microsoft’s terms of service.

- Locate the reporting mechanism: This is typically found within the platform’s settings or near the offensive content itself.

- Provide detailed information: Include screenshots, timestamps, and a clear description of the offense.

- Submit the report: Follow the platform’s instructions carefully.

- Expect a response (or lack thereof): Microsoft may not respond directly to every report, but it acts on reports according to the severity and nature of the offense.

Legal and Ethical Considerations

Navigating the digital landscape, Microsoft treads a fine line between fostering vibrant online communities and protecting its users from harmful content. This necessitates a careful consideration of the legal and ethical ramifications of regulating speech on its platforms like Xbox Live and Skype. The challenge lies in balancing freedom of expression with the need to create a safe and inclusive environment.

Microsoft faces a complex web of legal challenges in its efforts to moderate online speech. These challenges stem from differing interpretations of free speech laws across jurisdictions, the sheer volume of user-generated content, and the difficulty in consistently applying content moderation policies. Furthermore, the evolving nature of online communication, including the rise of sophisticated methods to circumvent restrictions, adds another layer of complexity.

Legal Challenges in Regulating Online Speech

The legal framework surrounding online speech varies significantly across countries. In some regions, free speech protections are expansive, making it challenging for platforms to remove content without facing legal repercussions. Conversely, other jurisdictions have stricter regulations regarding hate speech and online harassment, potentially exposing Microsoft to liability if it fails to adequately address such content. Determining the appropriate level of intervention while adhering to diverse legal standards presents a significant hurdle. For example, a post deemed acceptable in one country might be illegal in another, forcing Microsoft to navigate a complex legal maze. The company must constantly adapt its policies and enforcement mechanisms to comply with ever-changing regulations globally.
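In engineering terms, jurisdiction-dependent rules often end up as a lookup table. The sketch below uses invented region names and content categories purely to illustrate the "acceptable in one country, illegal in another" problem; it does not describe any real country's law or Microsoft's actual rules.

```python
# Categories assumed to be removed everywhere (hypothetical).
GLOBAL_RULES = {"threat"}

# Extra categories restricted in specific regions (hypothetical).
REGION_RULES = {
    "region_a": {"hate_speech"},
    "region_b": set(),
}


def must_remove(category: str, region: str) -> bool:
    """Return True if content of this category must be removed in the region."""
    restricted = GLOBAL_RULES | REGION_RULES.get(region, set())
    return category in restricted


if __name__ == "__main__":
    print(must_remove("hate_speech", "region_a"))  # restricted here
    print(must_remove("hate_speech", "region_b"))  # not restricted here
    print(must_remove("threat", "unknown_region"))  # global rule applies
```

Keeping the rules in data makes it easier to update them as regulations change, though deciding which category a given post falls into remains the hard part.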

Ethical Implications of Censorship and Freedom of Expression

The act of censorship, even with good intentions, raises fundamental ethical questions about freedom of expression. While removing offensive or harmful content protects users from abuse and promotes a positive online experience, it can also be perceived as an infringement on individual rights. Striking a balance between safeguarding users and upholding the principle of free speech requires careful consideration and transparent policies. The risk of biased moderation or over-censorship, potentially silencing marginalized voices or legitimate dissent, is a constant ethical concern.

Balancing User Protection and Free Speech

Microsoft’s approach to content moderation must carefully balance the competing interests of user safety and free expression. This involves developing clear and consistently applied policies that define what constitutes offensive language, while simultaneously ensuring that legitimate expression is not stifled. Transparency is crucial; users need to understand the rules and the process for appealing moderation decisions. The implementation of robust appeals processes, coupled with continuous review and refinement of policies, is essential to ensuring fairness and accountability. Overly aggressive censorship can lead to backlash and distrust, while inadequate moderation can create a toxic environment.

Hypothetical Case Study: The “Gamergate 2.0” Litigation

Imagine a scenario – “Gamergate 2.0” – where a coordinated online harassment campaign targets a female game developer on Xbox Live and Skype. The campaign involves a deluge of misogynistic and threatening messages, doxxing, and coordinated swatting attempts. Microsoft, after receiving numerous reports, takes down accounts and bans users involved in the campaign. However, some banned users sue Microsoft, arguing that their free speech rights were violated and that the company’s policies are overly broad and inconsistently applied. This hypothetical case highlights the potential legal challenges Microsoft faces: balancing its responsibility to protect users from harassment with the legal right to free speech, even when that speech is offensive or harmful. The outcome would depend on the specifics of the case, including the nature of the content, the application of Microsoft’s policies, and the legal standards in the relevant jurisdiction.

Last Word

Ultimately, Microsoft’s efforts to ban offensive language on Xbox Live and Skype highlight a larger struggle: how do we create truly inclusive online communities while upholding freedom of expression? While technological solutions are improving, the human element remains crucial. Effective moderation requires a blend of robust AI, human oversight, and a community actively engaged in reporting and promoting positive interactions. The fight for a more respectful online world is far from over, but the journey is worth charting.